Learning to Evaluate: How an Undergrad Improved Clinical Research Support at Cincinnati Children’s

Post Date: December 4, 2019

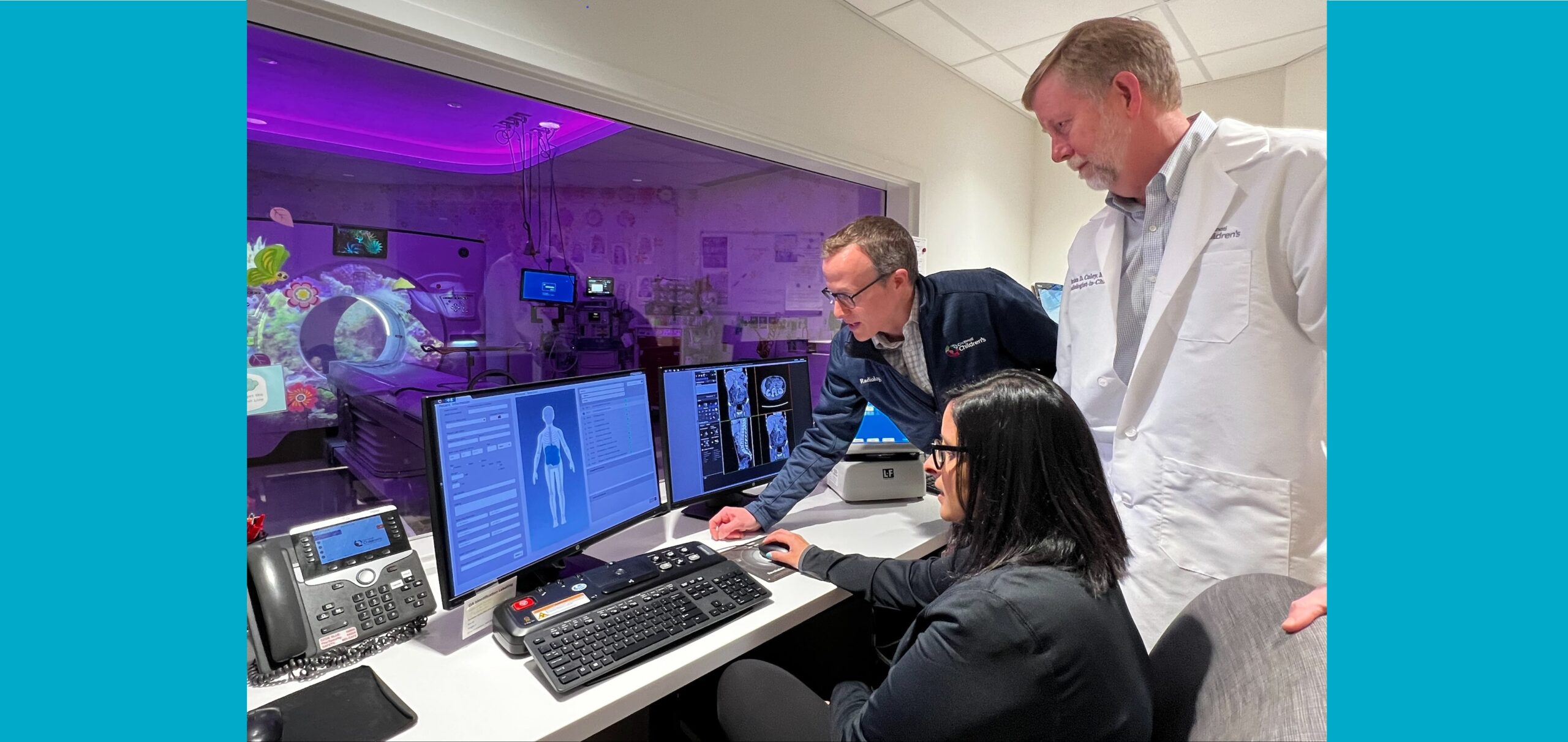

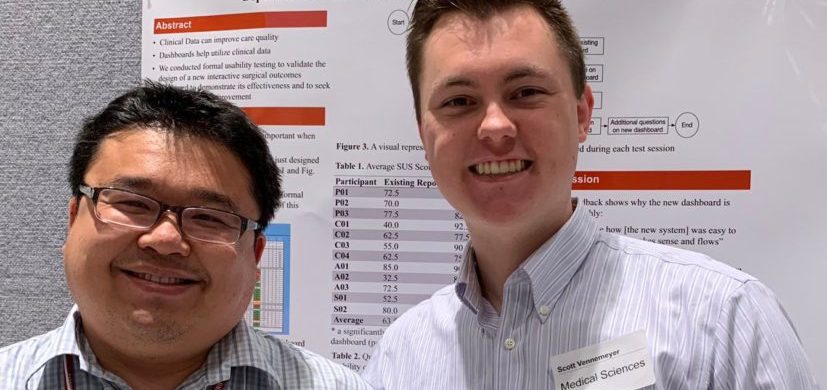

Scott Vennemeyer is studying to join the medical field, but his ambition to impact patient care goes beyond the bench and the bedside. As a student research intern in the Innovative Clinical Data Capture and Use (iCDCU) Laboratory led by Danny T.Y. Wu, PhD, MSI, Vennemeyer is investigating clinical research support methods to improve experiences for physicians, caregivers, and patients.

Vennemeyer’s work designing usability tests was recently published in Applied Clinical Informatics. The study evaluates an interactive dashboard for surgical quality improvement at the Cincinnati Children’s Heart Institute.

Researching clinical data use

In his first year as a Medical Sciences major at the University of Cincinnati College of Medicine, Vennemeyer began applying for research internships through the Biomedical Research and Mentoring Program (RaMP). The program pairs undergraduate students with mentors from UC and Cincinnati Children’s so that the students can gain experience in high-level biomedical research.

When Vennemeyer met with Wu, an Assistant Professor of Biomedical Informatics at UC and Cincinnati Children’s, he knew it was a match.

“Dr. Wu’s iCDCU Lab runs at an exciting pace with biomedical research,” says Vennemeyer. “I was interested in what he was doing with electronic health records and data visualization—making data look presentable and usable for clinicians.”

Now in his third undergraduate year, Vennemeyer has worked in the iCDCU Lab since January 2018. As a research intern, he takes the lead on assigned projects, coordinates student recruitment, and mentors underclassmen and high school students.

“Scott is highly motivated, willing to commit his time, and a team player with great communication skills, all of which are characteristics I am seeking for a research intern,” says Wu.

Hands-on lab experience has allowed Vennemeyer to discover his own research interests: visual analytics, innovative clinical data capture, and usability improvement. So far, his projects have focused on designing clinical research support systems, but his next step is conducting usability testing.

“I’m interested in making sure the systems we use for clinical research and patient education are usable, and that we decrease burden on caregivers and physicians,” says Vennemeyer. “This can have a big impact on clinical care.”

Conducting usability tests with the Heart Institute

For his first paper, on which he is second author, Vennemeyer collaborated with researchers at UC and Cincinnati Children’s to put his usability testing skills into practice. The surgical team at the Cincinnati Children’s Heart Institute planned to adopt a new interactive dashboard and wanted to assess how well it would work.

Previously, the surgical team used a static Excel spreadsheet to track patient data. The new dashboard was designed to be more dynamic and more informative for clinical decisions: with it, the team could search the database for specific ages and symptoms to predict outcomes.

In contrast to previous studies evaluating similar dashboards, the research team’s primary goal was not to evaluate how the tool could potentially improve clinical operations or care quality. Their approach was user-centered: How effective is the dashboard for people who will use it every day, and what can we learn to inform future design processes?

“Designers create the systems, but users might not understand how they work,” says Vennemeyer. “These two groups communicate through the system space. We want to bridge the gap to make it more intuitive.”

Study participants were separated into two groups: dashboard designers and dashboard users. Each participant completed an interview, a questionnaire, and two clinical scenario tasks. The researchers then assessed the usability of the interactive dashboard, which scored significantly higher than the static dashboard.

By comparing the responses of designers and users, the researchers were also able to identify gaps in understanding between the two groups. Some features of the interactive dashboard that were intended to improve the user experience actually caused usability issues. This highlights the importance of conducting a user-centered evaluation before a new tool officially launches: issues can be corrected earlier, leading to better results in the clinical setting.

“Data visualization allows you to see things in a different way,” says Vennemeyer. “Users are able to focus less on what they’re looking at on the screen, and more on how they can use the tool to improve patient experiences.”

To learn more about experience-based learning in informatics for undergraduate students, contact Danny T.Y. Wu, PhD, MSI, or visit the iCDCU Lab website.